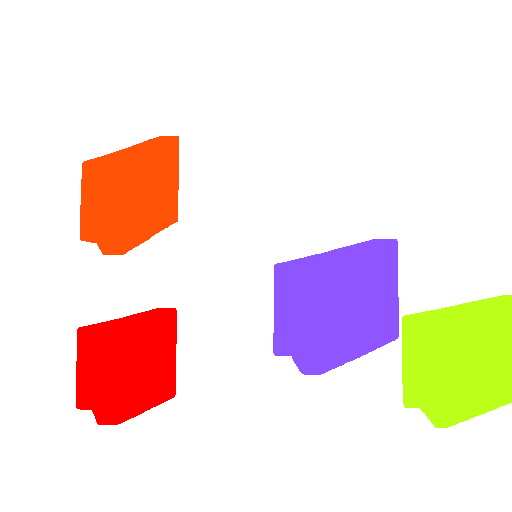

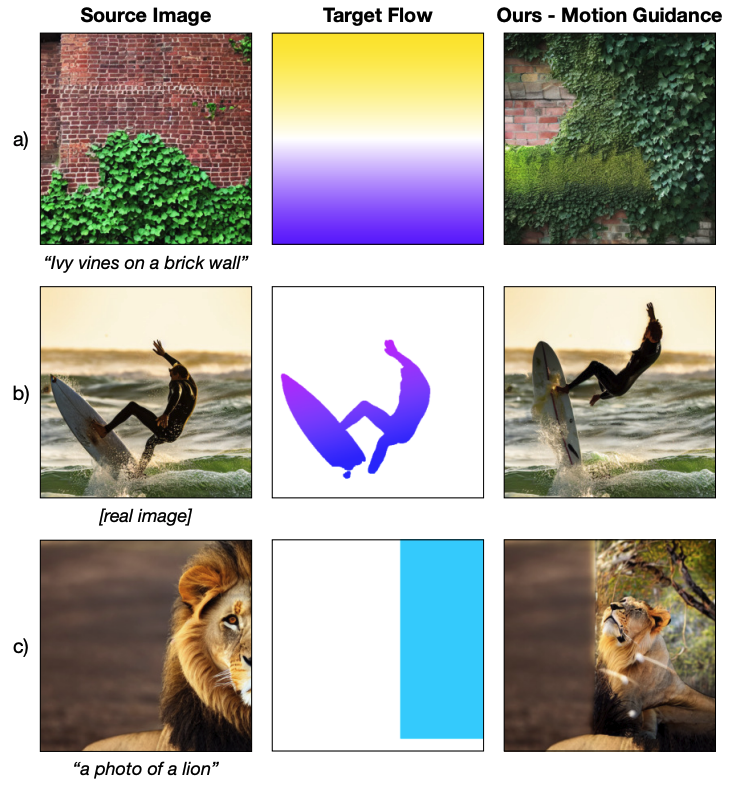

Examples

"a photo of a cat"

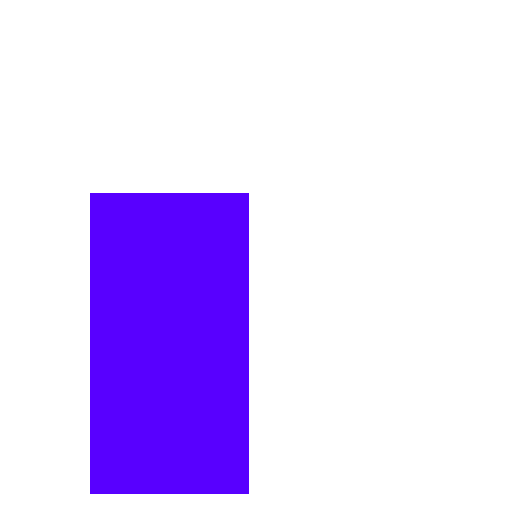

Target Flow

Source Image

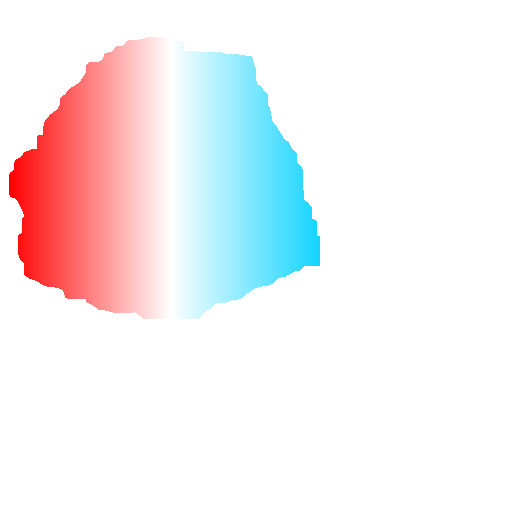

Motion Edited

"an apple on a wooden table"

Target Flow

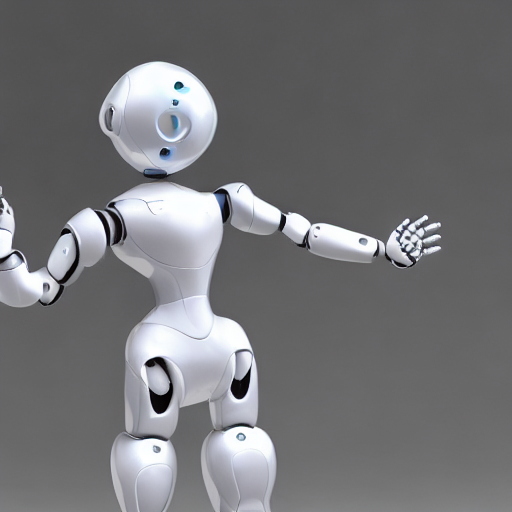

Source Image

Motion Edited

[real image]

Target Flow

Source Image

Motion Edited

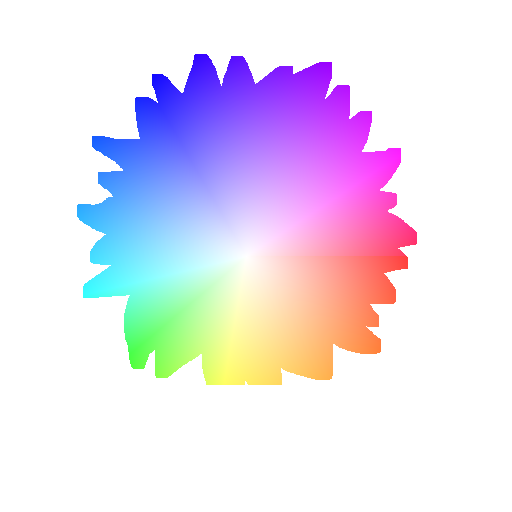

"a painting of a sunflower"

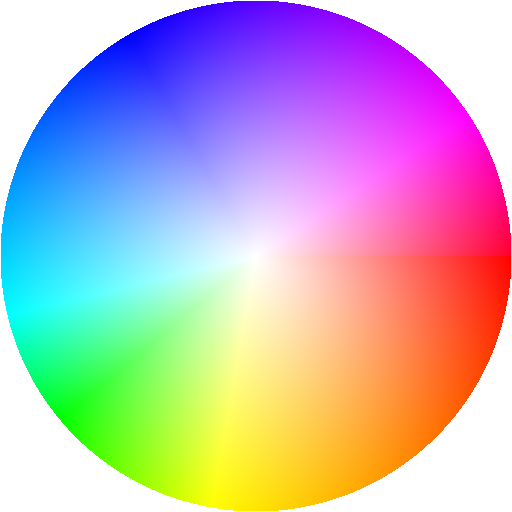

Target Flow

Source Image

Motion Edited

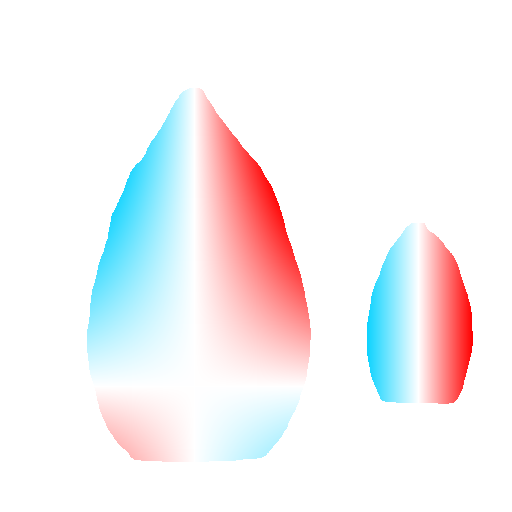

"a teapot floating in water"

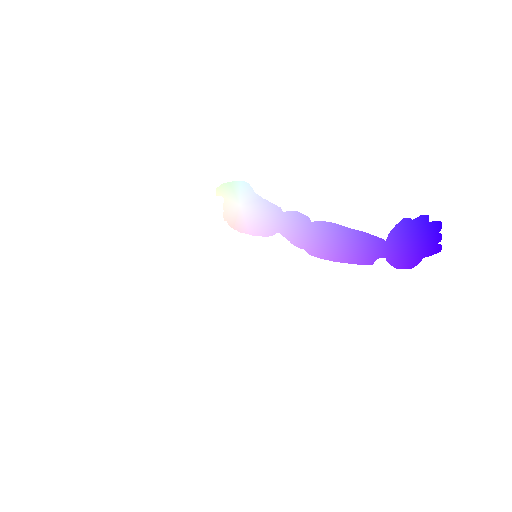

Target Flow

Source Image

Motion Edited

"a photo of a laptop"

Target Flow

Source Image

Motion Edited

"a photo of a topiary"

Target Flow

Source Image

Motion Edited

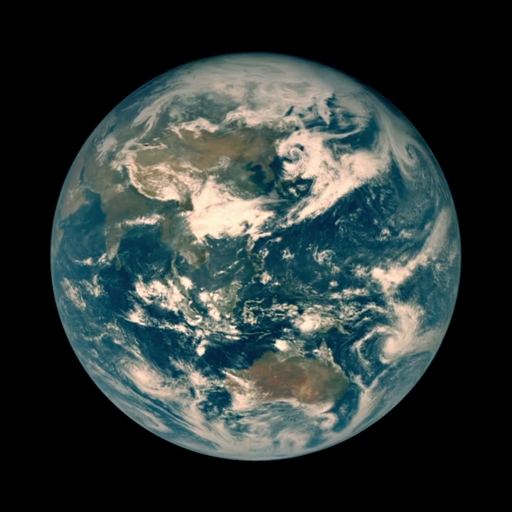

[real image]

Target Flow

Source Image

Motion Edited

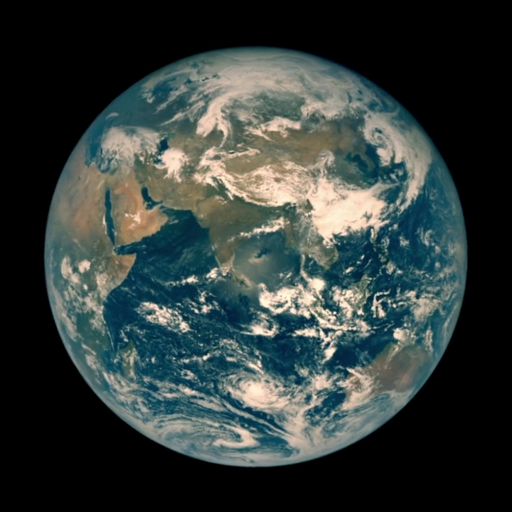

[real image]

Target Flow

Source Image

Motion Edited